Welcome to the ChETEC-INFRA information page for Viper, the University of Hull’s High Performance Compute (HPC) cluster.

Viper came online in 2016, with over 5,500 compute cores and dedicated GPU and high-memory resources. Viper supports researchers from across the University of Hull, as well as specialist teaching requirements. In 2021, Viper is being updated with a number of new resources to further enhance research across the University.

Successful ChETEC-INFRA applicants will be assigned a University of Hull user account, which gives access to Viper. From then on, access to Viper's compute and storage resources, along with technical support, is provided in the same way as for other University of Hull members.

HPC Support

Viper is supported by a dedicated HPC support team. As well as keeping Viper running, the team, which includes specialist Research Software Engineers, provides workflow advice and support.

Successful applicants will be provided with temporary visiting academic accounts for the University, allowing them access to Viper. Once an account has been created, support for Viper is provided via the University’s User Support Portal available at https://support.hull.ac.uk.

If you have a query regarding the use of Viper for ChETEC-INFRA prior to having received a University of Hull account, then please contact the TA Manager as detailed on the ChETEC-INFRA website. If you still need assistance, please email viper@hull.ac.uk and be sure to include “ChETEC-INFRA” in the subject or body.

Additional information about working on Viper is available on the Viper wiki pages at http://hpc.mediawiki.hull.ac.uk/

Video tutorials, including introductions to Linux and HPC as well as more advanced topics, are available to University account holders via MS Teams, as is the link to the HPC team's weekly virtual user drop-in sessions.

Software Modules

A list of currently available software modules can be found at https://hpc.hull.ac.uk/upload/module.html

Some applications are restricted to certain users, groups, departments or workflows because of licensing restrictions. If the software required for your research has any licensing restrictions, please highlight this in your ChETEC-INFRA application or contact the TA Manager.

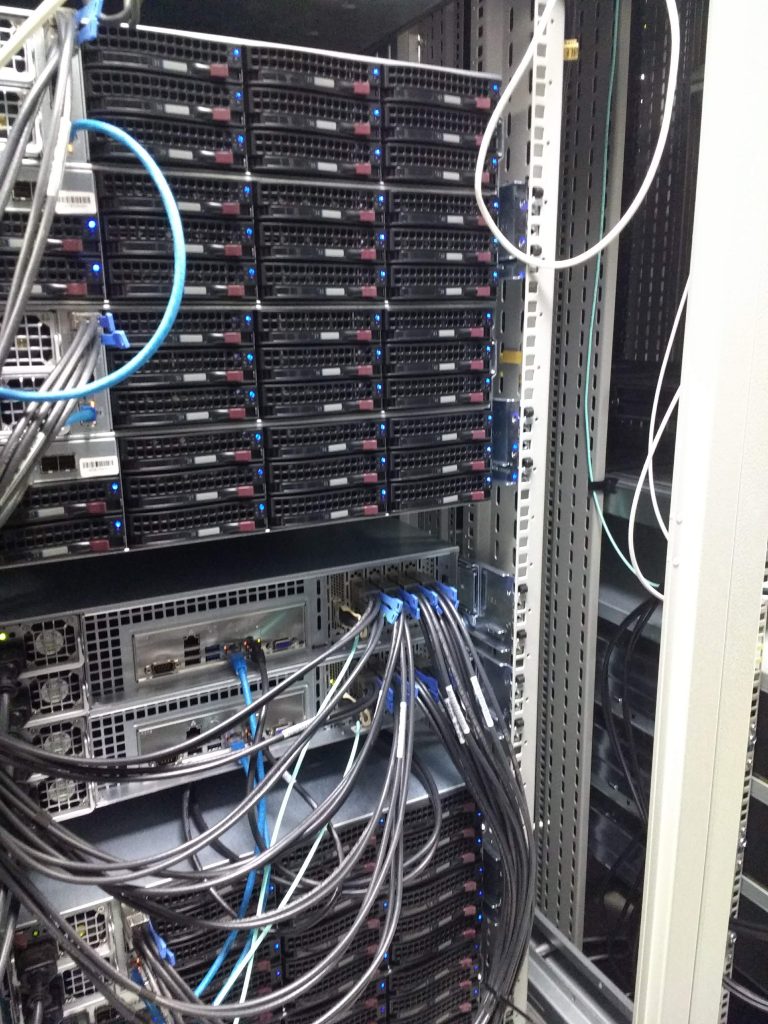

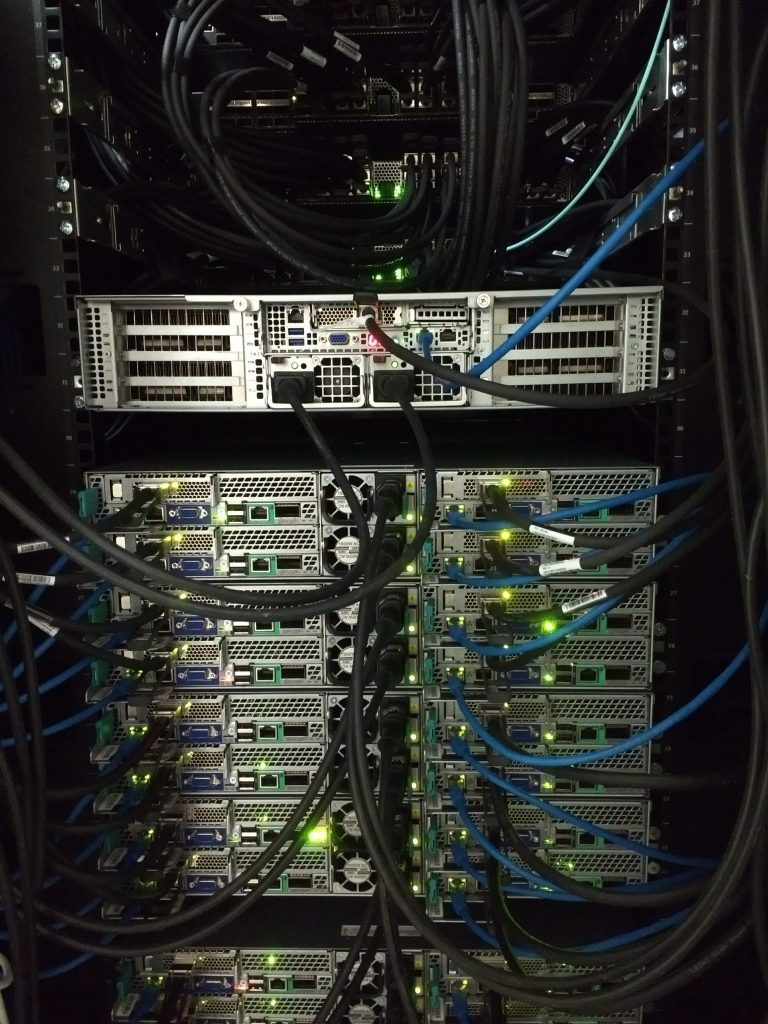

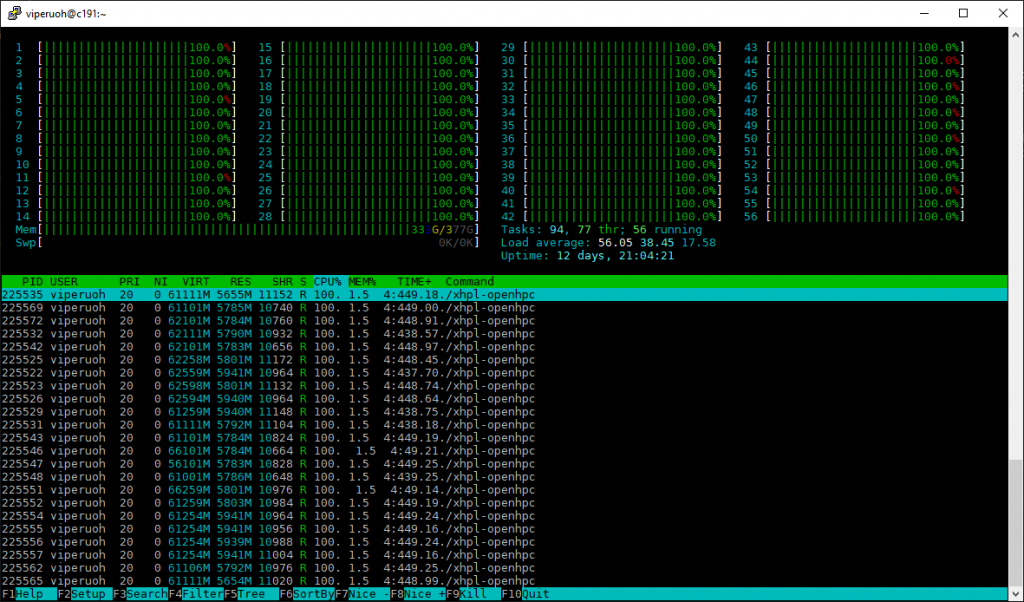

Viper Infrastructure

Viper can be used to run single-core or single-node tasks on standard compute nodes with up to 28 cores and 128 GB of RAM (the updated Cascade Lake nodes provide 56 cores and 384 GB). Tasks that require large amounts of memory can use high-memory nodes with up to 1 TB of RAM, and parallel tasks can run across multiple nodes over the 100 Gbps Intel Omni-Path interconnect.

Viper runs the Linux operating system and uses the Slurm scheduler to control access to approximately 5,500 processing cores in the following configurations:

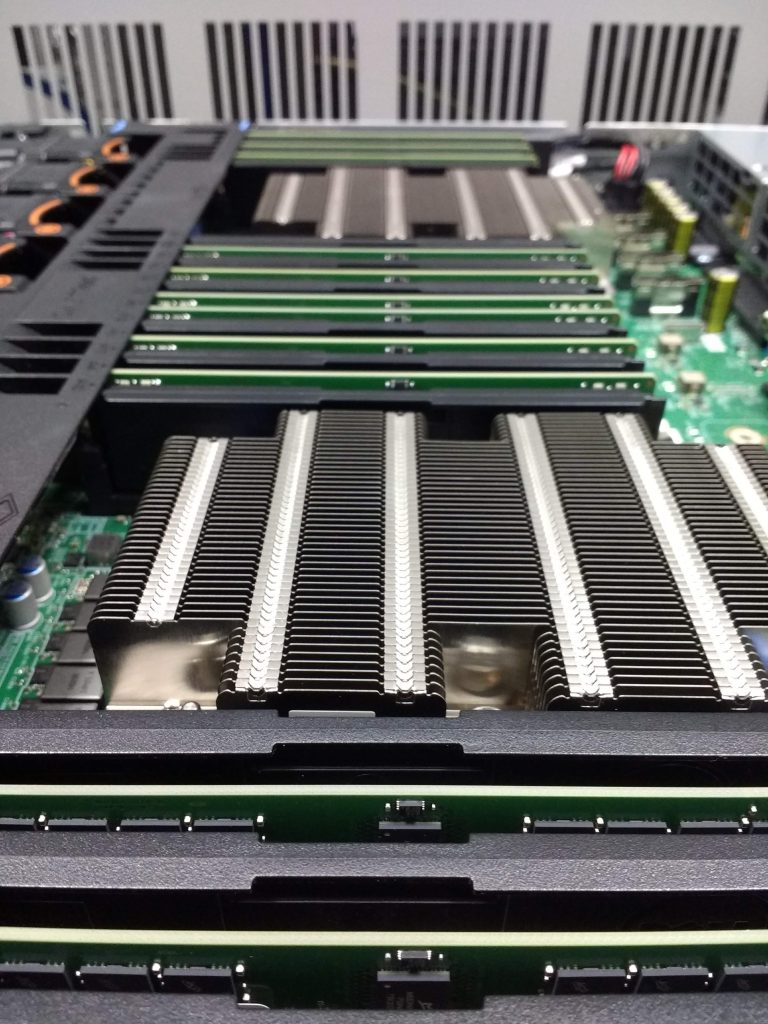

- 170 compute nodes, each with 2x 14-core Broadwell E5-2680v4 processors (2.4 –3.3 GHz), 128 GB DDR4 RAM

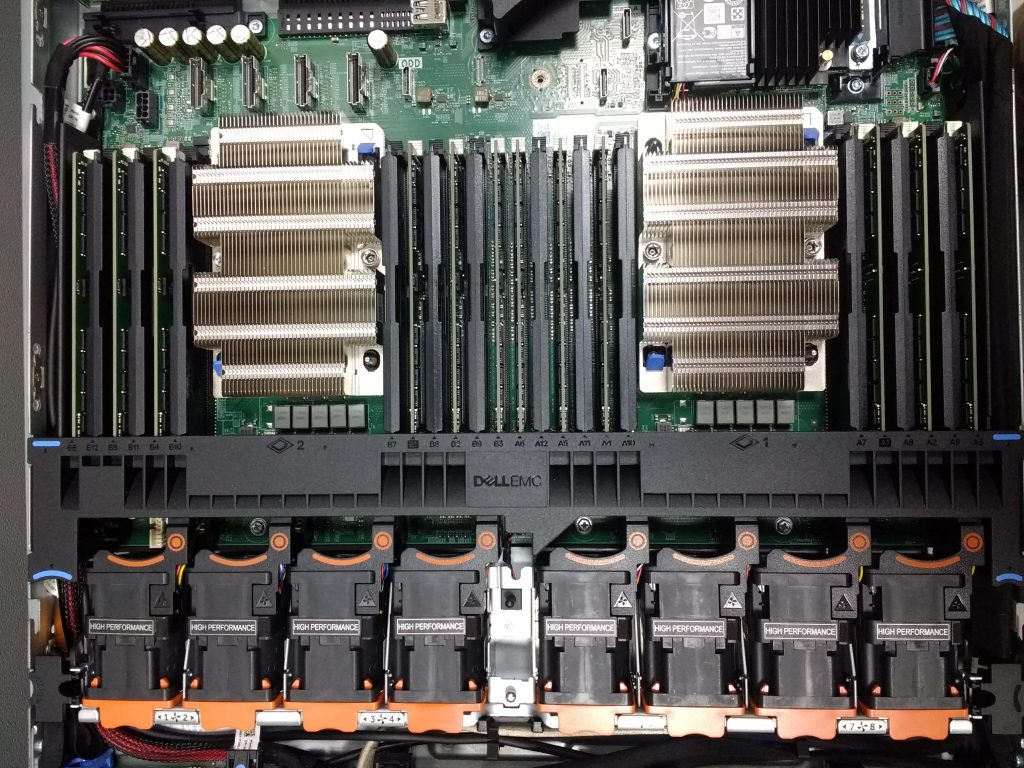

- 10 updated compute nodes, each with 2 x 28-core Intel Gold 6258R Cascade Lake processors (2.7 – 4.0 GHz), 384 GB PC4-2933 RAM)

- 4 high-memory nodes, each with 4x 10-core Intel Xeon E5-4620v3 Haswell processors (2.0 GHz) and 1 TB DDR4 RAM

- 4 GPU nodes, each identical to the standard compute nodes with the addition of an Nvidia A40 GPU card

- 2 visualisation nodes with 2x Nvidia GTX 980 Ti

- 500 TB primary and 200 TB secondary parallel file systems (both BeeGFS)
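As a rough cross-check of the "approximately 5,500 processing cores" figure, and as an aid to sizing jobs by memory per core, the node list can be tallied. This is a minimal sketch, not an official inventory. It assumes the GPU nodes have the same 2x 14 cores and 128 GB as the standard compute nodes, takes 1 TB as 1024 GB, and omits the visualisation nodes, whose CPU configuration is not given. The `NodeType` class and `VIPER_NODES` list are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeType:
    """One class of Viper node (hypothetical model for illustration only)."""
    name: str
    count: int            # number of nodes of this type
    cores_per_node: int   # sockets x cores per socket
    ram_gb_per_node: int  # RAM per node in GB

    @property
    def total_cores(self) -> int:
        return self.count * self.cores_per_node

    @property
    def ram_gb_per_core(self) -> float:
        return self.ram_gb_per_node / self.cores_per_node


# Transcribed from the node list. Assumptions: GPU nodes match the standard
# compute nodes (28 cores, 128 GB); 1 TB = 1024 GB; visualisation nodes omitted.
VIPER_NODES = [
    NodeType("compute (Broadwell)", 170, 2 * 14, 128),
    NodeType("compute (Cascade Lake)", 10, 2 * 28, 384),
    NodeType("high memory (Haswell)", 4, 4 * 10, 1024),
    NodeType("GPU", 4, 2 * 14, 128),
]


def total_cores(nodes: list[NodeType]) -> int:
    """Sum the cores across all listed node types."""
    return sum(n.total_cores for n in nodes)


if __name__ == "__main__":
    for n in VIPER_NODES:
        print(f"{n.name:<24} {n.total_cores:>5} cores  {n.ram_gb_per_core:5.1f} GB/core")
    print(f"{'total':<24} {total_cores(VIPER_NODES):>5} cores")
```

Under these assumptions the listed nodes add up to 5,592 cores, consistent with the approximate figure quoted above. The memory-per-core column (about 4.6 GB on standard nodes versus 25.6 GB on the high-memory nodes) shows why memory-hungry tasks are better placed on the high-memory nodes.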

Viper Image Gallery